Just as businesses were getting used to the idea of using artificial intelligence to analyze their data and suggest actions, a new domain has arrived and taken over the space. This domain, which is quickly becoming a technology in its own right, extends AI’s capabilities to the point where it can write content, compose music, and design images.

Known as generative AI, the concept exploded in late 2022 when OpenAI, a San Francisco-based technology company, launched DALL-E, an image generator, and ChatGPT, an AI chatbot, which people could use to create art or text. Following their mass success, competitors responded in kind and flooded the market with similar products.

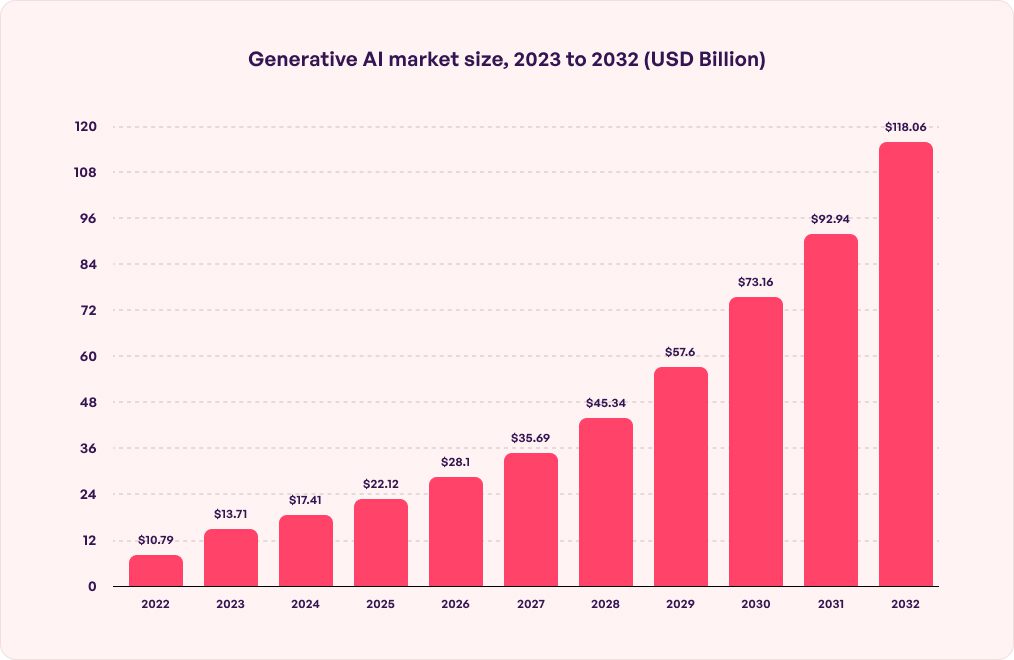

The demand that took off last year shows no sign of slowing down, with the market expected to reach US$207bn in 2030. Even so, while the technology has garnered a lot of attention, very few people actually know what it does.

We are here to change that. In this article, we cover generative AI in its entirety: its different models, the benefits it offers, its limitations, and high-level use cases.

Before we look into the different components of the technology, let us look at the factors that differentiate generative AI from traditional AI.

Generative AI vs Traditional AI

Although it is a subset of artificial intelligence, generative AI is fundamentally different. Traditional AI analyzes data and comes up with suggestions, whereas generative AI creates content: music, text, videos, and images.

Let us look into the details of their differences.

| Feature | Traditional AI | Generative AI |
| --- | --- | --- |
| Purpose | Perform tasks which need human-like intelligence | Generate new data or content |
| Output | Predictive outputs based on past data | Creative outputs |
| Application | Natural language processing, robotics, computer vision, etc. | Computer graphics, data augmentation, music generation, etc. |
| Learning source | Labeled, unlabeled, and partially labeled data | Unsupervised and semi-supervised methods without explicit labels |
| Limitations | Understanding context and managing ambiguity | Low quality and biased data |

How does generative AI work?

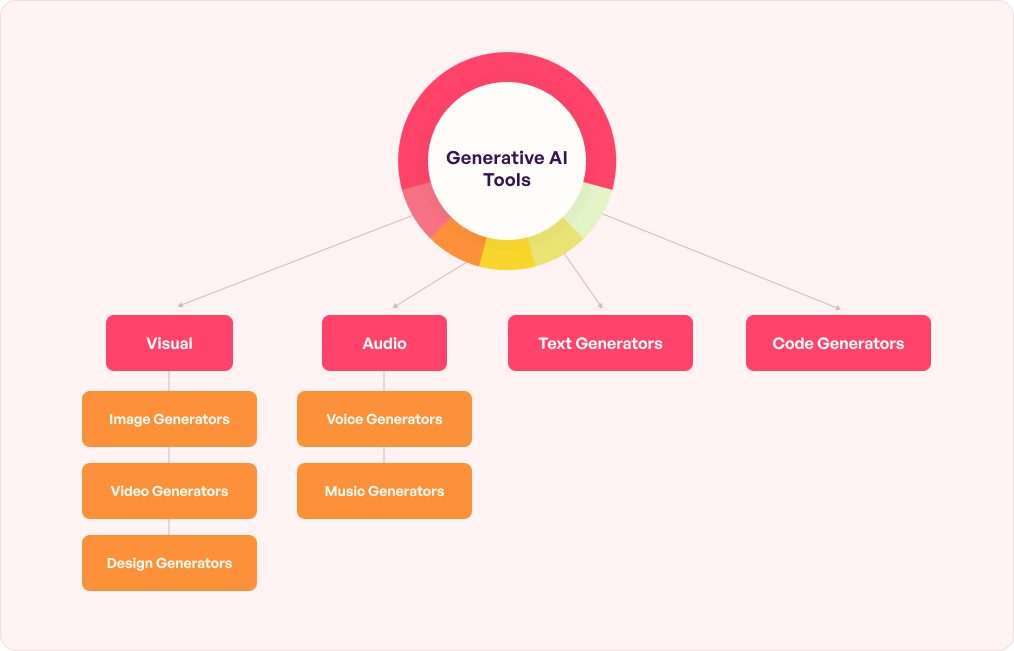

Generative AI tools are built on underlying AI models, such as large language models (LLMs) and other neural networks, which use intricate algorithms to create fresh content.

The model and method used usually vary with the desired output.

For example, generative adversarial networks (GANs) are often used for video and image generation. A GAN consists of two neural networks: a generator, responsible for creating content, and a discriminator, which assesses the quality of the output. The two networks are trained against each other in a feedback loop that pushes the output toward high realism.

While it is extremely difficult to explain the full complexity of how generative AI works, here’s a rough walkthrough of the process.

- Data gathering and preprocessing: Generative AI models need massive datasets to learn from. This data is gathered and converted into a form suitable for the model. For example, text data might have to undergo tokenization, and images may be resized or normalized.

- Model architecture selection: A large number of generative models exist, such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and autoregressive approaches like Transformers. The choice of architecture ultimately depends on the business’s specific data type and problem.

- Model training: The generative AI model is trained on the processed data. Through unsupervised or self-supervised learning, the model learns patterns such as how sentences are formed or how shapes and colors are composed in an image.

- Sampling and generation: Once trained, the model can generate new content by sampling from the learned distribution. For instance, a text generation model takes in a seed phrase and generates a continuation based on the language patterns it has learned. An image generation model can take in random noise and create a new image based on the visual patterns it has learned.

- Fine-tuning and evaluation: The generated outputs are evaluated for diversity and quality. The model can be improved by modifying hyperparameters, adjusting the architecture, or training for longer.
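To make the walkthrough concrete, here is a minimal sketch of the same steps on a toy scale. It swaps a real neural network for a simple word-level bigram counter, so it only illustrates the flow from preprocessing to evaluation. The corpus, the function names, and the `distinct_ratio` diversity measure are all assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

def tokenize(text):
    """Preprocessing: lowercase the text and split it into word tokens."""
    return text.lower().split()

def train_bigram(tokens):
    """Training: count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, seed, max_new_tokens, rng):
    """Sampling: extend the seed by drawing each next token from the learned counts."""
    out = [seed]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:  # token was never seen with a successor: stop early
            break
        words = list(followers)
        weights = [followers[w] for w in words]
        out.append(rng.choices(words, weights=weights, k=1)[0])
    return out

def distinct_ratio(tokens):
    """Evaluation: share of unique tokens, a crude proxy for diversity."""
    return len(set(tokens)) / len(tokens)

# Hypothetical toy corpus standing in for a massive dataset.
corpus = "the cat sat on the mat and the dog sat on the rug"
tokens = tokenize(corpus)
model = train_bigram(tokens)
sample = generate(model, seed="the", max_new_tokens=8, rng=random.Random(0))
print(" ".join(sample), distinct_ratio(sample))
```

A production system would replace the counting step with gradient-based training of a neural network, but the order of the stages stays the same.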

Now that we have looked at the high-level workings of generative AI, let us get down to the models that make it possible.

The key generative AI models

To truly understand the technology and the different generative AI applications, it is critical to know the models behind it. Here they are:

Generative Adversarial Networks (GANs)

GANs consist of a generator network and a discriminator network that compete with each other. The generator produces fake samples that resemble real data, while the discriminator tries to distinguish real data from fake. This adversarial feedback loop helps the GAN model generate sharp, realistic outputs.
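The competition can be expressed as two loss functions, sketched below in plain Python. The discriminator scores are hypothetical numbers rather than outputs of a trained network, and the generator loss uses the common non-saturating variant, which is an assumption on our part since the article does not name a specific formulation.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: rewards high scores on real data and low scores on fakes."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: rewards fakes that the discriminator scores as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that each sample is real.
d_real = [0.9, 0.8, 0.95]
d_fake_early = [0.1, 0.2, 0.05]   # early training: fakes are easy to spot
d_fake_late = [0.5, 0.45, 0.55]   # later: the discriminator is close to guessing

print(discriminator_loss(d_real, d_fake_early), generator_loss(d_fake_early))
print(discriminator_loss(d_real, d_fake_late), generator_loss(d_fake_late))
```

As the fakes become harder to tell apart, the generator loss falls and the discriminator loss rises, which is the tug-of-war the feedback loop relies on.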

Variational Autoencoders (VAEs)

VAEs are a type of probabilistic model that learns data through encoding and decoding. These models encode a data point into a lower-dimensional latent vector from which the data can be reconstructed. They can also generate new data by sampling random vectors from the latent space learned from the data.
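The sampling side of a VAE can be sketched without a neural network. The snippet below assumes a Gaussian latent space with a standard normal prior, which is the usual setup but is not stated in the article. The encoder output (`mu`, `log_var`) is a made-up example, and no decoder is included.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one data point in a 2-dimensional latent space.
mu, log_var = [0.5, -1.0], [0.0, -2.0]
rng = random.Random(0)
z = reparameterize(mu, log_var, rng)        # latent vector a decoder would reconstruct from
z_new = [rng.gauss(0.0, 1.0) for _ in mu]   # generation: sample the prior directly
print(z, z_new, kl_to_standard_normal(mu, log_var))
```

The KL term is what keeps the encoded vectors close to the prior during training, so that random vectors drawn from the prior decode into plausible new data.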

Autoregressive models

These sequential models predict the next data point conditioned on all the previous ones, so each token or pixel depends on those generated earlier. Examples include Transformers and RNNs. They are widely adopted for audio and text generation, where they model conditional probabilities.
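The idea of modeling conditional probabilities can be shown with a toy example. In the sketch below, `next_token_probs` is a hypothetical hand-written stand-in for a trained network. It only looks at the last token, whereas a Transformer or RNN would condition on the whole prefix.

```python
import math

VOCAB = ["a", "b"]

def next_token_probs(prefix):
    """Hypothetical conditional model: repeat the previous token with probability 0.8."""
    if not prefix:
        return {token: 1.0 / len(VOCAB) for token in VOCAB}
    return {token: 0.8 if token == prefix[-1] else 0.2 for token in VOCAB}

def sequence_log_prob(tokens):
    """Chain rule: log p(x) is the sum of log p(x_t | x_1..x_{t-1})."""
    return sum(
        math.log(next_token_probs(tokens[:t])[tokens[t]])
        for t in range(len(tokens))
    )

def greedy_continue(prefix, steps):
    """Generation: repeatedly append the most probable next token."""
    out = list(prefix)
    for _ in range(steps):
        probs = next_token_probs(out)
        out.append(max(probs, key=probs.get))
    return out

print(sequence_log_prob(["a", "a", "b"]))   # log(0.5) + log(0.8) + log(0.2)
print(greedy_continue(["b"], 3))
```

Real systems usually sample from the predicted distribution instead of always taking the most probable token, which is what makes their output varied.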

Flow-based models

Taking inspiration from probability flows, these models connect a simple source distribution to a complicated target distribution through a chain of invertible transformations. They enable exact log-likelihood computation and sampling from the target distribution.
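A one-dimensional sketch shows why invertibility gives an exact log-likelihood. It assumes a standard normal source distribution and uses simple affine maps as the invertible transformations. Real flow models learn far richer transformations, and the class and parameter values here are purely illustrative.

```python
import math
import random

def standard_normal_log_pdf(z):
    """Log-density of the simple source distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))

class AffineFlow:
    """Invertible map x = scale * z + shift (scale must be non-zero)."""
    def __init__(self, scale, shift):
        self.scale, self.shift = scale, shift

    def forward(self, z):
        return self.scale * z + self.shift

    def inverse(self, x):
        return (x - self.shift) / self.scale

    def log_abs_det(self):
        # log |dx/dz| of the forward map, constant for an affine transform
        return math.log(abs(self.scale))

def log_likelihood(x, flows):
    """Change of variables: undo each flow in reverse order, subtracting its log|det|."""
    total = 0.0
    for flow in reversed(flows):
        x = flow.inverse(x)
        total -= flow.log_abs_det()
    return standard_normal_log_pdf(x) + total

def sample(flows, rng):
    """Sampling: draw from the source distribution and push it through the chain."""
    x = rng.gauss(0.0, 1.0)
    for flow in flows:
        x = flow.forward(x)
    return x

flows = [AffineFlow(2.0, 1.0), AffineFlow(0.5, -3.0)]   # hypothetical chain
print(log_likelihood(-2.0, flows), sample(flows, random.Random(0)))
```

Because every step can be undone exactly, the density of any data point is computed in closed form, without the approximations that VAEs need.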

Rule-based models

Instead of learning patterns implicitly, these models apply grammars, rules, or parameters to synthesize outputs such as text. The generation logic is programmed explicitly rather than learned from datasets.
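A tiny grammar-driven generator illustrates the approach. The grammar below is a hypothetical example written for this article, and nothing in it is learned from data.

```python
import random

# Hypothetical toy grammar: each non-terminal maps to a list of possible expansions.
GRAMMAR = {
    "SENTENCE": [["SUBJECT", "VERB", "OBJECT"]],
    "SUBJECT": [["the model"], ["the artist"]],
    "VERB": [["writes"], ["designs"]],
    "OBJECT": [["a poem"], ["an image"], ["a melody"]],
}

def expand(symbol, rng):
    """Recursively replace non-terminals with a randomly chosen expansion."""
    if symbol not in GRAMMAR:   # terminal: emit as-is
        return [symbol]
    words = []
    for part in rng.choice(GRAMMAR[symbol]):
        words.extend(expand(part, rng))
    return words

rng = random.Random(0)
sentence = " ".join(expand("SENTENCE", rng))
print(sentence)
```

Every output is guaranteed to follow the rules, but the system can never produce anything the rules do not already describe, which is the main trade-off against learned models.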

Based on these generative AI models, a number of generative AI applications and tools have entered the market.

Some platforms that are emerging as the top examples of the technology include:

- ChatGPT

- Character.AI

- Scribe

- AlphaCode

- GitHub Copilot

- Bard

- Cohere Generate

- DALL-E 2

- Claude

- Synthesia

- Duet AI

The ongoing rise in generative AI applications and tools can be attributed to the ever-growing list of generative AI use cases. Let us look at both industry-level and business-specific usage of the technology.

The use cases mentioned above only scratch the surface of what generative AI development services can do. With new tools coming in almost every day, it is safe to assume that, while you read this, an entrepreneur is working on a new use case that addresses a business-specific or industry-grade application.

Alongside the many use cases and obvious benefits of generative AI that the business world has already experienced, there are also some associated risks.

Risks and concerns associated with generative AI

If you have worked with any generative AI application, you would know that the tools sometimes make things up that have little to no connection with the truth. While that may be tolerable for businesses with very little online presence, medium and large companies can suffer harmed performance and reputation when they don’t run a validity check.

Here are some other risks associated with generative AI.

Understanding context

Generative AI tends to struggle with grasping the context of the inputs that users share. This can occasionally lead to nonsensical responses.

Actual creativity

Although the technology can imitate styles, generative AI doesn’t have actual imagination, creativity, or emotions. It depends on data and patterns, rather than actual inspiration, to create content.

Bias

Generative AI takes on the biases present in the data it learned from. This can result in biased output that reinforces societal prejudices.

Hallucinations

One of the biggest challenges in this field is how the models make up scenarios, data, and stories based on the data they are built on. Powered by AI models and LLMs, the technology can generate false content based on its own understanding of a context.

With this, we have looked into the many facets of the technology, including how generative AI compares with traditional AI. If you are a business person reading this, we are sure you are looking for ways to enter the space now, at a time when the scope for innovation is ripe.

This is where our AI development company in Texas comes in. We have extensive subject-matter expertise in building generative AI chatbots like Character.AI and implementing such features in your existing applications. Get in touch with our team today to set up your generative AI-backed project.