The buzz around generative AI (GenAI) is loud. Many businesses that previously used artificial intelligence in limited areas plan to expand its use in 2024. This steady adoption is driven by GenAI's ability to solve real-world problems with advanced techniques and transformative power. That ability has been made possible by the co-evolution of:

- neural network models and

- computer processing power.

GenAI has become a major force in helping brands adapt to consumer preferences and needs, make data-driven predictions, and streamline content creation. However, businesses still lack clarity on how to deploy this technology without inviting ethical problems or legal action. Here is what the Biden-Harris administration is doing about it.


Safe AI implementation across the nation: here’s how.

Vice President Kamala Harris announced the first government-wide policy from the Office of Management and Budget (OMB) on the federal use of AI. It covers:

- harnessing all possible benefits of AI

- managing the risks that come with it

- stopping the use of non-compliant AI by December 1, 2024

The policy is part of President Biden’s Executive Order on AI, covering privacy and data governance in the public sector. In short, it is a concrete effort to promote responsible AI use.

Illegal and unethical use of AI is a concern for every industry that GenAI is transforming, such as banking, healthcare, and travel. So, let us look at the legal and ethical considerations of GenAI. Knowing the checkpoints in advance will help you avoid unnecessary roadblocks in business.

Why should businesses prioritize addressing the legal and ethical concerns of generative AI?

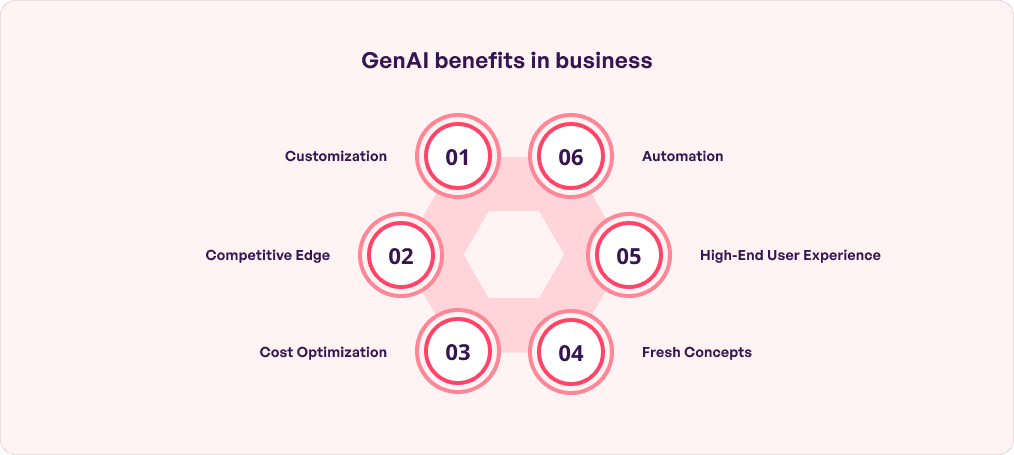

AI is changing how business is done. A PwC survey found that 54% of companies worldwide have already implemented GenAI. It is shaping the future of many industries as they put AI to work across different verticals. Its top business benefits include realistic simulations, data synthesis, adaptive learning, hyper-personalization, and time and cost savings.

However, although GenAI adds enormous value across many domains, fraudsters often misuse its potential. At worst, innocent images are manipulated into explicit content. There is also a significant surge in:

- privacy breaches

- exploitation of system loopholes

- unauthorized data access, and

- highly sophisticated fake content.

No wonder only 43% of Americans trust AI tools to be fair. The rest worry that the technology is a primary driver of cybersecurity issues, spam, and misinformation. The good news is that you can address these concerns thoughtfully.

To begin with, following legal standards and ethical codes for generative AI is a must. All parties must also establish specific norms and guidelines for the safe deployment of GenAI. This helps earn public trust, because people know their personal information is safe. Meanwhile, you stay protected against data violations and avoid the financial and reputational damage that is otherwise a high price for a business to pay. Let’s now move on to the ethical and legal considerations of generative AI.

What are the ethical concerns of generative AI?

Powerful technologies, development frameworks, cybersecurity, and trusted administrative decisions are the core components of an AI-based business. You have ticked them all off, but competitors are still a step ahead? What else are they doing right in the GenAI ecosystem? Most likely, they are also navigating the ethical dimensions, going beyond legal requirements on consent, accountability, and transparency toward customers. You should do the same.

Below are a few ethical considerations for generative AI worth implementing, whether you are a start-up or an enterprise.

Privacy and data security

This is central to the ethics of generative AI. Many generative models are trained on data that includes personal information, which carries a risk of data breaches. User information can reach these systems when it is shared with third parties to:

- sell products and services

- host polls and surveys

- conduct research such as medical analysis

- gain valuable insights into website interactions.

Meanwhile, cybercriminals watch for the right moment to strike. They infiltrate weak security systems and steal passwords, debit and credit card details, social media profiles, insurance, financial, and healthcare information, and work logins. So, as a responsible company, you must have robust cybersecurity measures in place to meet the ethical considerations of generative AI.

Lack of accountability

GenAI sometimes produces harmful content. The problem arises when nobody is willing to take accountability for it, and analysts offer reluctant or casual explanations. As an entrepreneur, however, you must be able to give a plausible explanation for the results your chatbot provides. If you cannot, avoid training your model on topics that could strongly affect communities and livelihoods.

Sensitive data leak

Brands should share data with extreme care, especially sensitive and confidential data such as health records or financial information. This helps prevent GenAI-related malware attacks and leaks. Further, extract only the information you need and remove non-essential details before processing, a practice called data minimization.
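The minimal Python sketch below makes data minimization concrete. It assumes that records arrive as dictionaries. The allowlist `ALLOWED_FIELDS`, the field names, and the two regular expressions are hypothetical examples. Real redaction usually needs more robust detection than a pair of patterns.

```python
import re

# Hypothetical allowlist: the only fields this GenAI task actually needs.
ALLOWED_FIELDS = {"ticket_id", "product", "issue_summary"}

# Simple illustrative patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits


def redact(text: str) -> str:
    """Mask e-mail addresses and card-like numbers inside free text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub("[CARD]", text)


def minimize(record: dict) -> dict:
    """Drop non-allowlisted fields, then redact identifiers in the remaining text."""
    return {
        key: redact(value) if isinstance(value, str) else value
        for key, value in record.items()
        if key in ALLOWED_FIELDS
    }


if __name__ == "__main__":
    ticket = {
        "ticket_id": 42,
        "customer_name": "Jane Doe",
        "product": "Card",
        "issue_summary": "Contact jane.doe@example.com about card 4111 1111 1111 1111.",
    }
    print(minimize(ticket))
```

Running it keeps `ticket_id`, `product`, and a redacted `issue_summary`, and drops `customer_name` entirely. Filtering by an allowlist rather than a blocklist means that any new field stays out until someone decides it is needed.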

Hallucinations

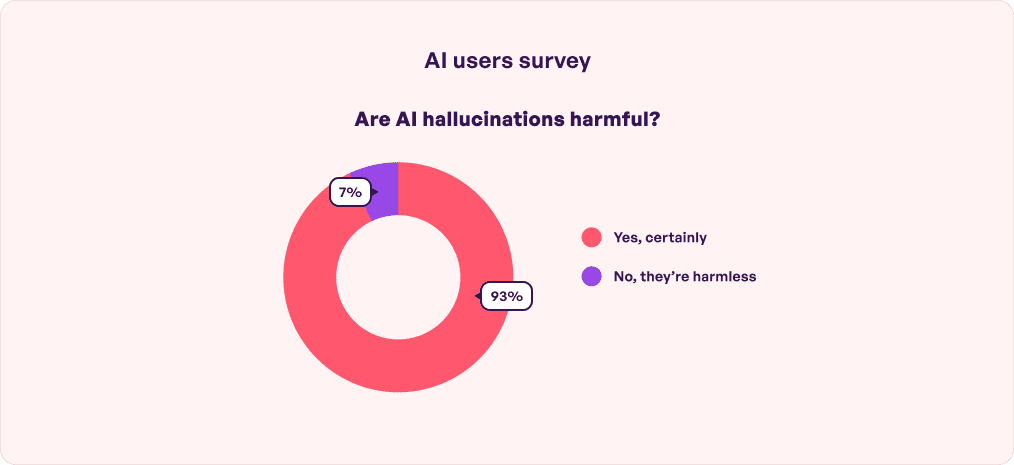

GenAI lacks common sense, and chatbots hallucinate an estimated 3% to 27% of the time. A hallucination is a confident answer that has no connection to the real world. It mostly stems from insufficient training data and incorrect assumptions: the model misinterprets a prompt because its training data is biased or too complex for it to generalize from. This exposes a core limitation of GenAI: it can mimic, but it does not truly comprehend.

Invented or irrelevant content can be dangerous in fintech or healthcare. If acted upon, it can compromise customers’ health and wealth, two critical aspects of life. So, how do you ensure accurate information and meet this ethical consideration of generative AI? Incorporating careful human review is an effective way to catch flaws and correct them.
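One way to build human feedback into the workflow is a review gate. The gate holds answers in high-stakes domains until a person approves or corrects them. The Python sketch below is only an illustration. `HIGH_STAKES_DOMAINS`, `ReviewGate`, and its methods are hypothetical names. A production system would also persist the queue and record who approved what.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical set of domains where an unchecked answer could harm health or wealth.
HIGH_STAKES_DOMAINS = {"healthcare", "fintech"}


@dataclass
class ReviewGate:
    """Holds high-stakes GenAI answers until a human reviewer signs off."""

    pending: list = field(default_factory=list)

    def submit(self, domain: str, answer: str) -> Optional[str]:
        # Low-stakes answers are released straight away.
        if domain not in HIGH_STAKES_DOMAINS:
            return answer
        # High-stakes answers are queued for review; nothing is released yet.
        self.pending.append((domain, answer))
        return None

    def approve(self, index: int, corrected: Optional[str] = None) -> str:
        # The reviewer either accepts the draft or supplies a corrected answer.
        _domain, draft = self.pending.pop(index)
        return corrected if corrected is not None else draft


if __name__ == "__main__":
    gate = ReviewGate()
    print(gate.submit("travel", "Pack light for a weekend trip."))
    print(gate.submit("healthcare", "Double your usual dose."))  # queued, prints None
    print(gate.approve(0, corrected="Please follow your doctor's dosage advice."))
```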

Strong biases

Depending on its algorithms and training data, GenAI may produce discriminatory content, amplifying biases beyond how they exist in reality. This harms individuals and communities. Here is proof.

A Bloomberg analysis of 5,000 images generated with Stable Diffusion found that the model amplified racial and gender stereotypes to an extreme degree. In other words, the technology can intensify biases on its own, depending on how it interprets its training data. This includes racial stereotypes, religion-based derogatory content, and hateful speech and imagery. All of these have serious consequences, such as tarnishing your brand’s reputation, so that you lose both revenue and customers.
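A simple way to begin auditing for this kind of bias is to have people label a sample of generated outputs. You then compare how often each group appears against a reference share. The Python sketch below assumes that such human-assigned labels already exist. The function name, the reference shares, and the 10-percentage-point tolerance are hypothetical choices. A share gap is only one narrow signal of bias.

```python
from collections import Counter


def representation_gaps(labels, expected_share, tolerance=0.10):
    """Flag groups whose share of sampled outputs differs from a reference share.

    labels: one human-assigned group label per generated output.
    expected_share: reference share per group (hypothetical, e.g. from census data).
    Returns {group: observed_share - expected_share} for groups beyond the tolerance.
    """
    if not labels:
        raise ValueError("need at least one labeled output")
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for group, expected in expected_share.items():
        observed = counts[group] / total  # Counter returns 0 for unseen groups
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 2)
    return gaps


if __name__ == "__main__":
    # Toy sample: perceived gender in 10 images generated for one prompt.
    sample = ["man"] * 8 + ["woman"] * 2
    print(representation_gaps(sample, {"man": 0.5, "woman": 0.5}))
```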

Questionable content

GenAI is backed by big data: huge, diverse datasets that are used to train models and help them identify patterns and correlations. While this sounds good, such data can contain questionable and offensive content. For instance, Google Photos mislabeled Black people as gorillas in 2015, which sparked public outrage. The incident showed that technology built by humans can inherit human biases, and it highlighted the limits and shortcomings of AI. Further, the data used to train GenAI may lack proper consent from its original creators.

One way to avoid this is to use synthetic data where possible, for example when testing GenAI. As a company, you should also audit your training data periodically through iterative testing to maintain its quality. Otherwise, keep data collection to a minimum and obtain user consent, stating why and how your company will use the data.

To align with the principles of fairness and inclusivity, have your GenAI solution built by software or mobile app developers who conduct rigorous audits with the ethical implications of generative AI in mind. Also, add human review for highly sensitive information. This way, you do your part to help GenAI reflect human values like compassion and empathy and build a kinder world.

Transparency and liability issues

Transparency is a critical generative AI policy that helps investors, employees, and customers spot biases and correct what is going wrong. However, GenAI works as a ‘black box’: only inputs and outputs are visible, while the internal processes are opaque. There is little or no insight into how answers are generated or how the AI makes decisions in general, which reduces accountability. An effective way to tackle this complexity is to have humans conduct regular assessments and audits. This way, you can explain AI decisions and restore stakeholder confidence.
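Regular human audits are easier when every interaction is recorded. Here is a minimal Python sketch of an append-only audit log in JSON Lines format. The field names and the `log_interaction` helper are hypothetical. Storing a SHA-256 hash of the prompt instead of the raw text is one design choice among several. Note that hashing short or guessable prompts does not make them anonymous.

```python
import hashlib
import io
import json
from datetime import datetime, timezone


def log_interaction(sink, model_version: str, prompt: str, output: str) -> dict:
    """Append one GenAI interaction to a JSON Lines audit log for later human review."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Store a hash rather than the raw prompt text; identical prompts can still be matched.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output": output,
    }
    sink.write(json.dumps(entry) + "\n")
    return entry


if __name__ == "__main__":
    buffer = io.StringIO()  # stands in for an append-only file or log service
    log_interaction(buffer, "model-v1", "Is my loan approved?", "Your application needs review.")
    print(buffer.getvalue())
```

Recording the model version alongside each output lets auditors tie a questionable answer to the exact model that produced it.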

How does Simublade help solve all of the above?

Simublade is your trusted AI development company. Our team of seasoned professionals is dedicated to meeting your business needs by building transformative GenAI. We provide tried-and-tested solutions with careful review and ethical checks to deliver the best possible results for your business.

We do this by training the model on accurate, realistic, and unbiased data and testing it rigorously to ensure compliance. In-depth analysis and filtering follow, keeping your GenAI model’s errors to a minimum.

What are the legal considerations of generative AI?

Federal agencies across the US have put rules in place for the acquisition, use, and development of GenAI. Understanding these legal boundaries helps protect Americans’ right to safety, which requires brands to eliminate algorithmic discrimination and monitor AI impacts. The rules also encourage a legal framework for preventing inappropriate AI-generated content and data distortion, which helps brands avoid legal repercussions. Below are a few top considerations.

General Data Protection Regulation (GDPR)

The GDPR, adopted by the EU, is widely regarded as the strongest data protection law in the world. It applies to any US business that operates in the EU or handles the personal data of people in the EU. Businesses like yours must understand where the GDPR and AI intersect. In practice, personal data must be handled in a way that protects it from breaches. For instance:

- reducing how identifiable the data is through pseudonymisation

- ensuring that any re-identification of data or creation of new personal data is lawful

- deploying AI tools in a way that also takes human skills and organizational norms into account to preserve individual interests.
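Pseudonymisation, the first item above, can be sketched in Python with a keyed hash (HMAC-SHA256) from the standard library. This is an illustrative assumption and not a technique the GDPR mandates. The helper names and the `id_fields` default are hypothetical. The key must be stored separately from the data, because anyone who holds it can recompute pseudonyms for known identifiers and re-link them.

```python
import hashlib
import hmac
import secrets

# Assumption: in practice this key lives in a separate, access-controlled store.
SECRET_KEY = secrets.token_bytes(32)


def pseudonymise(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    Under one key, the same identifier always maps to the same pseudonym,
    so records can still be joined without exposing the identifier itself.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_record(record: dict, id_fields=("email", "name")) -> dict:
    """Pseudonymise the listed identifier fields and leave other fields untouched."""
    return {k: pseudonymise(v) if k in id_fields else v for k, v in record.items()}


if __name__ == "__main__":
    print(pseudonymise_record({"email": "jane@example.com", "age": 34}))
```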

The GDPR establishes legal principles for the safe use of AI, grounded in rights and social values that are embedded in national constitutions and EU treaties. As an entrepreneur or project manager, get a clear picture of the GDPR provisions that are relevant to AI. This will help you develop data protection principles that align with the benefits of using AI, such as GenAI, to improve your business.

European Union Artificial Intelligence Act

The EU AI Act applies to US companies that offer AI systems or services in the EU, or whose AI output is used there. This matters all the more because the US has yet to adopt comparably stringent federal rules for the tech space, such as the AI Act or the GDPR. The AI Act covers three significant areas:

- high-risk applications, which are regulated

- applications that are not classified as high-risk

- applications that pose unacceptable risk, which are banned.

The European Union Artificial Intelligence Act received final approval from the Council of the EU on May 21, 2024. This recent approval is a good reason to start learning what the act requires. Doing so will pay off, since the act is expected to become a global benchmark rather than a rule that only matters in the EU.

Intellectual property protection

IP threats have increased significantly in the AI era. The technology is rapidly being used to:

- generate clones of copyrighted material

- train models on original work

All of this often happens without crediting or compensating the owner. Such copying is risky, because businesses, not just cybercriminals, can face legal battles over it. For instance, The New York Times sued Microsoft and OpenAI in December 2023 for training their chatbots on Times articles without permission.

Therefore, avoid such controversial practices. Familiarize yourself with the US Copyright Act to understand the basic legal framework: only the owner has the exclusive right to copy, distribute, and make derivative works, among other rights. Train your GenAI so that it does not scrape protected material such as original sound recordings, music videos, designs, articles, and similar works, and you avoid infringement lawsuits.
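When you collect training data, one small and partial safeguard is to skip pages whose owners disallow automated access. You can also skip any sources you have chosen to exclude. The Python sketch below uses the standard library's `urllib.robotparser`. The user-agent string and the blocklist are hypothetical. Honoring robots.txt does not by itself make training lawful, so this check complements a copyright review rather than replacing it.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical crawler name used when checking robots.txt rules.
USER_AGENT = "ExampleTrainingBot"


def allowed_to_collect(robots_lines, url, blocked_domains=frozenset()):
    """Return False for excluded domains or for URLs the site's robots.txt disallows."""
    if urlsplit(url).netloc in blocked_domains:
        return False
    parser = RobotFileParser()
    parser.parse(robots_lines)  # robots.txt content, already fetched, as a list of lines
    return parser.can_fetch(USER_AGENT, url)


if __name__ == "__main__":
    rules = ["User-agent: *", "Disallow: /articles/"]
    print(allowed_to_collect(rules, "https://example.com/articles/2023/story"))  # False
    print(allowed_to_collect(rules, "https://example.com/about"))  # True
```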

Confidentiality rights

The proposed American Privacy Rights Act (APRA) of 2024 is a comprehensive privacy and confidentiality bill. It aims to establish consistent national consumer data privacy rights and replace the current patchwork of state-by-state privacy laws. The goal is to put people in control of their data and protect both American adults and children.

Enterprises should know that, if enacted, this law would govern AI, including through a private right of action for consumers. It would also cover automated decision-making and place additional obligations on large social media companies. APRA is designed to preempt state privacy laws and create a single national standard. However, the proposal is not yet final and may take years to come into force.

Given this upcoming law, businesses must take consumer privacy seriously. Developers would be required to design their models so that they do not threaten individuals. Brands would also have to offer ‘opt-out rights’, meaning consumers could opt out of the use of their personal information. Businesses would further need consumers’ consent before collecting and using biometric or genetic data.
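APRA's final text may still change, so the Python sketch below only illustrates the two obligations described above. The first is honoring an opt-out. The second is requiring explicit consent before using biometric or genetic data. `Consent`, `usable_record`, and the field names in `SENSITIVE_FIELDS` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical field names treated as biometric or genetic data.
SENSITIVE_FIELDS = {"fingerprint_hash", "face_embedding", "dna_profile"}


@dataclass
class Consent:
    opted_out: bool = False          # consumer opted out of personal-data use
    sensitive_consent: bool = False  # explicit consent for biometric/genetic data


def usable_record(record: dict, consent: Consent) -> Optional[dict]:
    """Return the part of a record that may be used, or None if the user opted out."""
    if consent.opted_out:
        return None
    if consent.sensitive_consent:
        return dict(record)
    # Without explicit consent, drop biometric and genetic fields.
    return {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}


if __name__ == "__main__":
    profile = {"user_id": 1, "city": "Austin", "face_embedding": [0.12, 0.98]}
    print(usable_record(profile, Consent()))
```

Defaulting both flags to the privacy-preserving choice means a missing consent record never unlocks sensitive data by accident.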

Consequences of violating legal and ethical generative AI policies

Brands are falling victim to hackers who use AI to break into their systems, targeting backdoor vulnerabilities, exposed APIs, and misconfigurations. No wonder phishing and ransomware are so common in 2024. Non-compliance with established standards for AI use can be costly enough to strain the operating budget and lead to long-term debt. Here is proof: according to IBM’s 2023 Cost of a Data Breach Report, the average cost of a data breach for businesses worldwide was $4.45 million, and each stolen record cost approximately $165. These losses add up quickly. Check the following list to get an idea.

Damaged reputation

Customers, clients, and partners have entrusted you with their data. If that data is leaked or misused by AI, they are likely to lose trust in your brand, which leads to discontinued products and services and reputational damage. Stakeholders can also sue on the grounds of unfair business practices. All of this can push your business behind competitors and should be avoided. Studies have found that 95% of entrepreneurs consider reputation management key to business success.

Productivity loss

If it takes 24 hours to detect and stop a data breach, a full day of productivity is lost, and the downtime can be longer depending on how long it takes to recover. Productivity suffers because data is the lifeblood of most online businesses and is used in day-to-day activities. A data breach is especially risky in healthcare, because it affects the timeliness and quality of critical patient care. Healthcare compliance can also be seriously compromised in the process.

Financial harm

A chapter in the IMF’s April 2024 Global Financial Stability Report found that the size of extreme losses from cyber incidents has more than quadrupled since 2017, to $2.5 billion. Other studies estimate that the cost of cybercrime will reach $10.5 trillion annually by 2025, which is not good news. At the level of individual businesses, firms can go bankrupt from paying large sums to IT teams to restore the integrity of financial data and defend against ransomware. Small businesses, in particular, often have to shut down because of the debt that cybercrime leaves behind.

So, how do you manage all of the above? Let’s discuss in the next section.

How can you make sure GenAI is ethically and legally compliant?

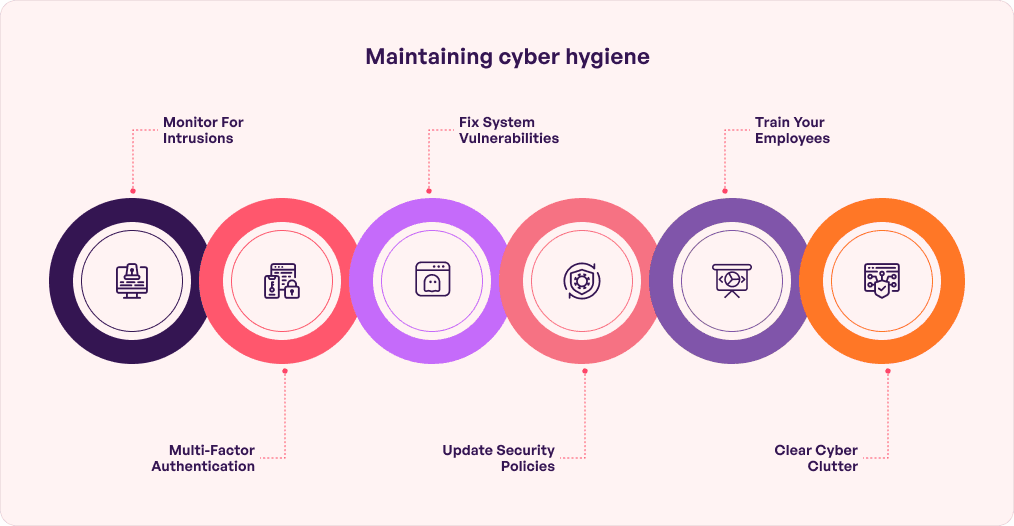

It may not be possible to guarantee 100% data protection at all times. However, implementing 360-degree cybersecurity measures to prevent data exploitation through GenAI is half the battle won. Best practices include:

- conducting regular compliance audits

- implementing encryption

- enforcing a password policy

- enabling firewall protection

- using advanced threat protection

Further, keep all your software updated with the latest security patches. Train your AI solution to block suspicious users or IP addresses before issues escalate, and use GenAI to analyze historical data and risk factors for timely insights on risk prevention. AI-driven defenses like these can counter AI-powered cyberattacks at a speed and scale that humans alone may not match. Finally, put together a clear remediation plan for potential AI breaches so that you are prepared to handle an AI cyberattack.
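Blocking suspicious access does not always require a trained model. The Python sketch below is a simple rule-based stand-in. It blocks an IP address after too many failed attempts within a sliding time window. The class name, the threshold, and the window length are hypothetical. A real deployment would typically enforce this at the firewall or API gateway.

```python
from collections import defaultdict, deque


class SuspiciousIPBlocker:
    """Block an IP after too many failed attempts within a sliding time window.

    The default threshold and window are hypothetical, not recommended values.
    """

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window = window_seconds
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self.blocked = set()

    def record_failure(self, ip: str, now: float) -> bool:
        """Record a failed attempt at time `now`; return True if the IP is now blocked."""
        attempts = self.failures[ip]
        attempts.append(now)
        # Discard failures that fall outside the sliding window.
        while attempts and now - attempts[0] > self.window:
            attempts.popleft()
        if len(attempts) >= self.max_failures:
            self.blocked.add(ip)
        return ip in self.blocked

    def is_allowed(self, ip: str) -> bool:
        return ip not in self.blocked


if __name__ == "__main__":
    blocker = SuspiciousIPBlocker(max_failures=3, window_seconds=10)
    for t in (0, 1, 2):
        blocker.record_failure("203.0.113.7", now=t)
    print(blocker.is_allowed("203.0.113.7"))  # False
```

A sliding window means that occasional failed logins spread over time are forgotten. Bursts that look like credential stuffing trip the block.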

To stay ethically compliant, make sure you know what is inside your AI system. This gives you a clear understanding of how much data it actually requires. Also, research the rules for cross-border data transfers, and test the data being fed to your GenAI models. Together, these steps reduce the risk of ethical offenses.

At Simublade, we offer cybersecurity measures that protect your business from attacks. You grow without financial or reputational losses while meeting all regulatory and industry-level requirements. Our generative AI development services, combined with high-end vulnerability management, cloud migration, and multi-factor authentication, make your brand far harder to hack.

What are the future predictions of GenAI?

The path ahead for GenAI is bright. It is set to improve every layer of the tech stack: engineering tools, infrastructure, models, and applications. Gartner predicts that by 2027, more than 50% of the GenAI models that enterprises use will be specific to an industry or business function. These domain models are likely to be smaller and trained on well-structured, focused topics. So, as a business, you can tune a GenAI model to your domain and needs. This reduces the risk of hallucinations, inaccuracy, and bias, which ultimately helps you meet the ethical considerations of generative AI.

FAQs

Q. What are the legal risks associated with GenAI models?

Ans. Copyright infringement and other intellectual property violations are the top legal risks in the AI landscape. Taking strong steps against them helps you comply with the legal considerations of generative AI.

Q. What are the concerns of generative AI?

Ans. Exposure of sensitive data, image morphing, phishing, and similar privacy issues, which have significant ethical and legal consequences such as loss of trust and lawsuits, respectively.

Q. What are the responsibilities of developers using generative AI in ensuring ethical practices?

Ans. Developers must screen the training data for hate speech, harmful information, and inaccurate details. Experts at Simublade are well versed in these guidelines and know exactly how to apply them for a seamless experience. This helps businesses meet the ethical and legal considerations of generative AI.

Q. What are some ethical considerations when using generative AI?

Ans. Ethical considerations of generative AI include transparency, fairness, honesty, accountability, and avoiding hallucinations.

Q. What are the legal concerns of generative AI?

Ans. Legal concerns of GenAI include loss of intellectual property, breaches of private data, and loss of confidentiality, which can lead to penalties or even business closure.